Overview

Trend following is a family of systematic strategies that attempt to align with persistent price moves rather than anticipate turning points. Volatility is the statistical variability of returns. The intersection of the two determines how a strategy experiences drawdowns, how it sizes positions, and how it adapts when market conditions change. A trend follower that ignores volatility exposes the portfolio to uneven risk through time, since the same nominal position can deliver very different outcomes across regimes. A volatility-aware trend follower targets a steadier risk profile by adjusting exposure in response to changes in the distribution of returns.

This article defines trend following and volatility with care, then builds the logic for integrating the two into a structured, repeatable trading system. It addresses measurement choices, risk management, and a high-level operational example. It avoids precise trade signals, explicit prices, and recommendations.

Defining Trend Following

Trend following focuses on measurable features of price that suggest directional persistence. Instead of forecasting fair value, it reacts to evidence that price has been moving consistently upward or downward. Common proxies for trend include moving averages, momentum calculations, and breakout concepts. These tools smooth or summarize past prices to identify the direction and magnitude of recent movement.
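
The three proxies named above can be sketched in a few lines. This is a minimal illustration, not a recommended signal: the function names, the window lengths, and the sample price list are all assumptions chosen to keep the example small.

```python
from statistics import mean


def moving_average_signal(prices, fast=3, slow=5):
    """Return +1 if the fast average is above the slow average, -1 if below, 0 if equal."""
    if len(prices) < slow:
        raise ValueError("need at least `slow` prices")
    fast_ma = mean(prices[-fast:])
    slow_ma = mean(prices[-slow:])
    return (fast_ma > slow_ma) - (fast_ma < slow_ma)


def momentum(prices, lookback=5):
    """Simple rate of change over `lookback` bars."""
    if len(prices) <= lookback:
        raise ValueError("need more than `lookback` prices")
    return prices[-1] / prices[-1 - lookback] - 1.0


def breakout_signal(prices, lookback=5):
    """Return +1 if the latest price exceeds the prior `lookback` highs, -1 if below the lows, else 0."""
    if len(prices) <= lookback:
        raise ValueError("need more than `lookback` prices")
    prior = prices[-1 - lookback:-1]  # excludes the latest bar
    if prices[-1] > max(prior):
        return 1
    if prices[-1] < min(prior):
        return -1
    return 0


# Hypothetical closing prices, for illustration only.
prices = [100, 101, 102, 101, 103, 104, 106]
ma_sig = moving_average_signal(prices)
roc = momentum(prices)
brk = breakout_signal(prices)
```

All three functions look only at past and current prices, which is the property that makes them usable as reactive, rules-based inputs.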

Two design principles are central. First, the system treats the market as a source of serial correlation, albeit one that can be weak and intermittent. Second, the system enforces rules for entry, sizing, and exit that operate the same way across instruments and time. The goal is not to catch every swing. It is to capture part of sustained moves while keeping losses contained during choppy periods.

Defining Volatility

Volatility describes how much returns vary around their mean. It can be observed statistically through realized measures or implied via options markets. In systematic trading, realized volatility estimators are most common because they can be derived from historical prices without requiring an options market.

Several realized estimators appear frequently in practice. The standard deviation of close-to-close returns summarizes return dispersion over a lookback window. True-range-based measures combine each bar's high and low with the prior close, so that gaps and overnight moves are counted. Range estimators such as Parkinson (high and low) or Garman–Klass (open, high, low, and close) extract additional information from intraday extremes. Each estimator trades off responsiveness and stability, and each can be computed on multiple timeframes.
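
As a rough sketch, three of these estimators can be written with the standard library alone. The function names and the four-bar sample are assumptions for illustration; the results are per-bar figures and are not annualized.

```python
import math
from statistics import stdev


def close_to_close_vol(closes):
    """Sample standard deviation of log returns between consecutive closes."""
    rets = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    return stdev(rets)


def average_true_range(highs, lows, closes):
    """Mean true range in price units; the first bar is skipped because it has no prior close."""
    true_ranges = []
    for i in range(1, len(closes)):
        prev_close = closes[i - 1]
        true_ranges.append(max(highs[i] - lows[i],
                               abs(highs[i] - prev_close),
                               abs(lows[i] - prev_close)))
    return sum(true_ranges) / len(true_ranges)


def parkinson_vol(highs, lows):
    """Parkinson estimator: sqrt of mean squared log(high/low) divided by 4*ln(2)."""
    n = len(highs)
    total = sum(math.log(h / l) ** 2 for h, l in zip(highs, lows))
    return math.sqrt(total / (4.0 * n * math.log(2.0)))


# Hypothetical bars; the last bar gaps up from a prior close of 101.
highs = [101, 103, 102, 106]
lows = [99, 100, 100, 103]
closes = [100, 102, 101, 105]

atr = average_true_range(highs, lows, closes)
cc_vol = close_to_close_vol(closes)
pk_vol = parkinson_vol(highs, lows)
```

On the last bar the high-low range is 3 but the true range is 5, because the distance from the prior close is included. The Parkinson estimator sees only the high-low range and so does not register that gap.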

Volatility is not constant. It clusters, often rises during market stress, and can be asymmetric between down and up markets. These features create operational demands for a strategy that seeks to maintain a consistent risk budget through time.

Why Volatility Matters to Trend Followers

Volatility shapes both signal quality and risk. When volatility is low and trends are smooth, signals based on price persistence often experience fewer whipsaws. When volatility spikes, price can reverse quickly and repeatedly, which increases stop-outs and raises transaction costs. High volatility also amplifies the dollar impact of a given position size. A fixed number of shares can expose the portfolio to very different daily moves in quiet versus turbulent regimes.

Integrating volatility into a trend strategy can serve three purposes. First, it provides a common unit of risk, which allows position sizes to be scaled so that each position contributes a comparable amount of risk to the portfolio. Second, it can act as a filter that prevents trading during extremely noisy conditions or, alternatively, during exceptionally quiet conditions when signal-to-noise may be poor. Third, it shapes exit logic by informing how far a trailing stop or exit threshold might be placed relative to typical price fluctuations.

Core Logic of a Trend-Following-with-Volatility Strategy

A volatility-aware trend strategy can be described as a sequence of modular decisions that are applied consistently:

- State estimation. Estimate trend direction and strength using a transparent, rules-based calculation. This may involve smoothing or breakout style logic that identifies whether price has shown sustained directionality over a chosen horizon.

- Volatility estimation. Estimate current volatility over a comparable or slightly shorter horizon. The estimator should be robust to outliers and gaps, and its sampling frequency should match the trading frequency of the system.

- Position sizing. Translate trend conviction and volatility into position size, usually by targeting a risk contribution or a volatility-adjusted unit size. Instruments with higher estimated volatility receive smaller nominal sizes to equalize risk.

- Risk control. Set protective exits and portfolio-level constraints that are anchored to volatility, such as stop distances or maximum loss thresholds measured in risk units rather than price points.

- Exit and de-risking. Reduce or close positions when the trend weakens, reverses, or when volatility reaches levels that violate the system’s risk budget. Exits are rules-based and executed consistently.

These steps produce a framework that is systematic and repeatable. If two instruments display the same trend and volatility profile, the system responds the same way to both, subject to liquidity and cost constraints.

Measuring Trend and Volatility

Measurement choices strongly influence behavior. Trend filters can be constructed from moving averages, rate-of-change measures, regression slopes, or breakout distances. Shorter windows adapt quickly but generate more false signals in noisy markets. Longer windows are slower but can ride larger trends. Blending windows can moderate these extremes but adds complexity.

Volatility estimators require similar tradeoffs. Rolling standard deviation of returns is intuitive and easy to compute. True-range-based estimators capture gaps, which matters for instruments that trade in limited sessions and regularly move overnight. Range-based estimators that use high and low prices can be more efficient under certain statistical assumptions but can be sensitive to data quality. The sampling frequency should reflect the execution horizon. A daily system that trades end of day generally aligns volatility calculations to daily bars rather than intraday bars.

Stability is crucial. A very short volatility lookback will swing position sizes aggressively from day to day, which can create unnecessary turnover and cost. A very long lookback reacts slowly to regime shifts, which can leave the system under- or over-exposed. Some designers apply smoothing to the volatility estimate itself to temper these effects.
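
One simple way to smooth a volatility series is an exponentially weighted moving average. The sketch below assumes a smoothing factor `alpha` of 0.2 and a made-up series with a one-day spike; both are illustrative choices, and other smoothers would serve the same purpose.

```python
def ewma(values, alpha=0.2):
    """Exponentially weighted moving average, seeded with the first observation."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [values[0]]
    for v in values[1:]:
        smoothed.append(alpha * v + (1.0 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical daily volatility estimates with a single spike on the third day.
raw_vol = [0.010, 0.010, 0.030, 0.010, 0.010]
smooth_vol = ewma(raw_vol, alpha=0.2)
```

The spike lifts the smoothed estimate only part of the way, so a size derived from it changes less abruptly. The cost is that a genuine regime shift is also recognized more slowly.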

Using Volatility as a Filter

Volatility can be used to determine whether a signal is tradable under prevailing conditions. Two common ideas appear in practice. First, extremely low volatility may indicate that an apparent trend is more likely a statistical artifact of noise, especially on shorter timeframes. Second, extremely high volatility may indicate that price is dominated by jumps and reversals rather than smooth follow-through. A rule set can decline to open new positions when volatility falls outside a predefined band. That choice introduces a selection effect which can improve or degrade results depending on the market and timeframe, so it requires careful testing.
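
A band filter of this kind might look like the following sketch. The quantile cutoffs (10th and 90th percentile), the function name `is_tradable`, and the sample history are assumptions; a real system would need to justify its band through testing.

```python
def is_tradable(current_vol, vol_history, lower_pct=0.10, upper_pct=0.90):
    """Return True if current_vol lies inside the empirical quantile band of vol_history."""
    ranked = sorted(vol_history)
    last = len(ranked) - 1
    lower = ranked[round(lower_pct * last)]
    upper = ranked[round(upper_pct * last)]
    return lower <= current_vol <= upper


# Hypothetical history of daily volatility estimates.
history = [0.005, 0.008, 0.010, 0.011, 0.012, 0.013,
           0.015, 0.018, 0.022, 0.030, 0.045]

normal_ok = is_tradable(0.012, history)   # inside the band
too_quiet = is_tradable(0.004, history)   # below the lower cutoff
too_wild = is_tradable(0.050, history)    # above the upper cutoff
```

The filter only gates new entries in this sketch. Whether existing positions are also affected is a separate design decision.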

Position Sizing with Volatility Scaling

Volatility scaling converts heterogeneous instruments into comparable risk units. The goal is for each position to contribute a similar amount of expected variability to the portfolio. Conceptually, position size is set so that the product of size and estimated volatility equals a target risk contribution. If one instrument’s volatility is twice another’s, its nominal size is halved so that both carry similar risk. This does not eliminate risk. It shifts the emphasis from price points or contract counts to a standardized risk measure.
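
The sizing rule can be written as a single division. In this sketch `target_risk` is an assumed per-position risk budget in currency terms, `daily_vol` is a fractional daily volatility, and `point_value` is an assumed contract multiplier; the numbers are invented to mirror the "twice the volatility, half the size" case.

```python
def vol_scaled_units(target_risk, daily_vol, price, point_value=1.0):
    """Units such that units * price * point_value * daily_vol equals target_risk."""
    if daily_vol <= 0 or price <= 0 or point_value <= 0:
        raise ValueError("volatility, price, and point value must be positive")
    return target_risk / (daily_vol * price * point_value)


# Two hypothetical instruments at the same price; B is twice as volatile as A.
units_a = vol_scaled_units(target_risk=1000.0, daily_vol=0.01, price=100.0)
units_b = vol_scaled_units(target_risk=1000.0, daily_vol=0.02, price=100.0)
```

Here instrument A receives 1000 units and instrument B receives 500, so each position has the same expected daily variability in currency terms under the assumed volatility estimates.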

Several practical choices arise:

- Target risk level. The strategy defines a maximum per-position risk contribution and a total portfolio risk budget. These targets reflect acceptable drawdown and capital constraints.

- Smoothing. The volatility estimate used for sizing can be smoothed to reduce turnover in position sizes. Smoothing dampens reaction to one-off spikes.

- Caps and floors. Minimum and maximum position sizes prevent exposures from vanishing in very high volatility or from becoming excessively large in very low volatility.

- Discrete units. Instruments that trade in fixed lots or have minimum position increments may require rounding rules that preserve risk balance without introducing bias.
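
Caps, floors, and lot rounding can be combined in one small helper. The lot size and the limits of 1 and 50 lots are assumptions for illustration. Note that Python's built-in `round` rounds exact halves to the nearest even integer, which is one possible way to avoid a systematic rounding bias.

```python
def constrained_lots(raw_units, lot_size, min_lots=1, max_lots=50):
    """Round an unsigned raw unit count to whole lots, then clamp to [min_lots, max_lots]."""
    if lot_size <= 0:
        raise ValueError("lot_size must be positive")
    lots = round(raw_units / lot_size)
    return max(min_lots, min(max_lots, lots))


typical = constrained_lots(1234, lot_size=100)   # rounds to 12 lots
floored = constrained_lots(20, lot_size=100)     # rounds to 0, floor lifts it to 1
capped = constrained_lots(9000, lot_size=100)    # rounds to 90, cap limits it to 50
```

The helper works on position magnitude only. Trade direction would be applied separately by the trend signal.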

Volatility scaling is path dependent. As volatility changes, the system rebalances. During rising volatility, position sizes shrink, which can mechanically reduce drawdowns. During falling volatility, sizes grow, which can improve participation in smoother moves. The net effect depends on the interaction between volatility dynamics and trend persistence.

Exits and Volatility-Conscious Risk Controls

Exits are often defined relative to volatility. A trailing exit that moves with price may be placed at a distance that reflects typical daily ranges, so that normal fluctuations do not trigger premature liquidation. Time-based exits are another option, closing a position after a fixed holding period if trend evidence has weakened. Some systems combine both methods, for example by using a structural trend reversal as the primary exit and volatility-based levels as a protective backstop.
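
A volatility-based trailing exit for a long position can be sketched as follows. The multiple of 3, the fixed range estimate `atr`, and the price path are assumptions; holding the range estimate constant is a simplification, since a live system would typically update it each bar.

```python
def trailing_stop_long(closes, atr, multiple=3.0):
    """Stop level per bar: highest close so far minus multiple * atr."""
    stops = []
    highest = closes[0]
    for c in closes:
        highest = max(highest, c)
        stops.append(highest - multiple * atr)
    return stops


def first_exit_index(closes, stops):
    """Index of the first bar whose close is at or below the prior bar's stop, else None."""
    for i in range(1, len(closes)):
        if closes[i] <= stops[i - 1]:
            return i
    return None


# Hypothetical closes for a long position and an assumed typical daily range of 1.5.
closes = [100, 102, 105, 104, 99, 101]
stops = trailing_stop_long(closes, atr=1.5, multiple=3.0)
exit_bar = first_exit_index(closes, stops)
```

The one-point pullback from 105 to 104 stays inside the band, while the drop to 99 breaches the stop of 100.5 set on the previous bar.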

Portfolio-level risk controls complement trade-level exits. Examples include a daily loss limit measured in risk units, a maximum drawdown threshold that triggers de-risking, and correlation-aware limits that reduce exposure when assets move together more than usual. Since correlations tend to rise in stress regimes, a system that is unaware of this feature can unintentionally concentrate risk.
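
A drawdown-triggered de-risking rule is one of the simpler portfolio controls to express. The 10 percent trigger, the halving of exposure, and the name `risk_multiplier` are assumptions made for this sketch.

```python
def risk_multiplier(equity_curve, dd_trigger=0.10, reduced=0.5):
    """Return `reduced` when the drawdown from the running peak reaches dd_trigger, else 1.0."""
    peak = max(equity_curve)
    drawdown = 1.0 - equity_curve[-1] / peak
    return reduced if drawdown >= dd_trigger else 1.0


# Hypothetical equity paths.
in_drawdown = risk_multiplier([100.0, 110.0, 98.0])    # about 10.9 percent below the peak
near_peak = risk_multiplier([100.0, 110.0, 105.0])     # about 4.5 percent below the peak
```

The multiplier would scale every position's target size, which keeps the rule independent of any single instrument.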

Transaction Costs, Liquidity, and Execution

Volatility interacts with costs and liquidity in several ways. Spreads typically widen and depth thins during turbulent periods, which raises slippage. Frequent resizing due to a very reactive volatility estimate can lead to higher turnover. Systems that operate on volatile instruments or shorter horizons are more sensitive to these effects. Aligning the holding period with the cost structure is part of robust design. For exchange-traded instruments with uneven trading hours, overnight gaps can dominate realized volatility, which affects stop behavior and execution planning.

Backtests that ignore these frictions overstate performance. Cost assumptions should reflect realistic spreads, commissions, and market impact for the intended trade size. Sensitivity analysis that increases assumed costs provides a view of robustness to adverse execution conditions.
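
A cost sensitivity check can be as simple as recomputing net performance under progressively higher cost assumptions. In this sketch the per-period gross returns, the turnover figures, and the cost levels are all invented, and cost is modeled as proportional to turnover, which ignores nonlinear market impact.

```python
def net_return(gross_returns, turnovers, cost_per_turnover):
    """Sum of per-period returns after subtracting a cost proportional to turnover."""
    return sum(g - t * cost_per_turnover
               for g, t in zip(gross_returns, turnovers))


# Hypothetical per-period gross returns and turnover (fraction of capital traded).
gross = [0.010, -0.004, 0.006, 0.002]
turnover = [1.0, 0.2, 0.5, 0.1]

# Re-evaluate the same history under base, doubled, and quadrupled costs.
cost_levels = [0.001, 0.002, 0.004]
stressed = [net_return(gross, turnover, c) for c in cost_levels]
```

If the net result turns negative under a modest increase in assumed costs, the strategy's edge is thin relative to its turnover.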

Backtesting, Robustness, and Expectancy

A disciplined workflow separates research, validation, and deployment. Historical testing evaluates how the strategy behaves across varied markets and regimes. Out-of-sample testing and walk-forward analysis help guard against overfitting. Parameter stability checks examine whether small changes to lookback lengths or volatility windows produce large and inconsistent performance swings. A strategy that relies on a narrow set of tuned parameters is unlikely to be robust in live trading.

Expectancy for trend strategies often features a relatively low win rate combined with average wins that are larger than average losses. Volatility management does not change this fundamental character. It influences dispersion of returns and drawdown shape. Monte Carlo resampling of trade sequences can be used to approximate the range of possible equity curves given the observed distribution of returns. Stress tests that include volatility spikes, correlation surges, and gaps are informative because these conditions drive real-world risk.
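
One common form of this resampling is a bootstrap: draw trades with replacement, compound them, and record the maximum drawdown of each simulated path. The trade list, path count, and seed below are assumptions. Sampling trades independently also discards any serial dependence between them, which is a limitation of this sketch.

```python
import random


def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve, as a positive fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst


def resampled_drawdowns(trade_returns, n_paths=1000, seed=0):
    """Bootstrap trades with replacement; return the sorted max drawdown of each path."""
    rng = random.Random(seed)
    n = len(trade_returns)
    return sorted(max_drawdown(rng.choices(trade_returns, k=n))
                  for _ in range(n_paths))


# Hypothetical trade returns: many small losses and a few larger wins.
trades = [-0.01, -0.01, 0.04, -0.01, -0.02, 0.06, -0.01, -0.01, 0.03, -0.01]
dds = resampled_drawdowns(trades)
median_dd = dds[len(dds) // 2]
p95_dd = dds[int(0.95 * (len(dds) - 1))]
```

Comparing the median and a high percentile of the simulated drawdowns gives a sense of how much worse than the historical path a plausible reordering could have been.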

Risk metrics should reflect the system’s objectives. Maximum drawdown, volatility of returns, skewness, and downside deviation provide a fuller picture than simple average return. For multi-asset systems, risk contribution by instrument and by sector reveals concentrations that may not be evident from nominal weights.
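
The metrics listed above are straightforward to compute from a return series. This sketch uses population formulas and a zero threshold for downside deviation; those conventions, the function names, and the five sample returns are assumptions.

```python
import math
from statistics import mean, pstdev


def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve, as a positive fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst


def downside_deviation(returns, threshold=0.0):
    """Root mean square of shortfalls below `threshold`, averaged over all periods."""
    squared = [min(0.0, r - threshold) ** 2 for r in returns]
    return math.sqrt(sum(squared) / len(returns))


def skewness(returns):
    """Population skewness: third central moment divided by the cubed population std."""
    m = mean(returns)
    s = pstdev(returns)
    return sum((r - m) ** 3 for r in returns) / (len(returns) * s ** 3)


# Hypothetical periodic returns.
returns = [0.02, -0.01, 0.03, -0.02, 0.01]
summary = {
    "volatility": pstdev(returns),
    "downside_deviation": downside_deviation(returns),
    "skewness": skewness(returns),
    "max_drawdown": max_drawdown(returns),
}
```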

Regime Awareness and Adaptation

Volatility regimes can persist. Trend signals that work during calm periods can degrade during highly volatile, mean-reverting phases. Some designers include adaptive features such as volatility-conditioned filters or dynamic signal thresholds that tighten during stress and loosen in quiet conditions. Others keep the core signal static and adapt only position sizing. Both approaches are valid, but additional degrees of freedom increase the risk of overfitting. Any regime-aware component should be justified by clear evidence and tested across multiple markets and timeframes.

Regime identification can be as simple as classifying the market as low, medium, or high volatility based on a rolling estimator. More elaborate methods use probabilistic state models. Complexity is not guaranteed to improve outcomes. Clarity and reliability are typically more valuable than marginal improvements in backtested performance.
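
The simple three-bucket classification might be sketched like this. Tercile cutoffs are an assumed choice, and the cutoffs here are taken from the full sample, which is acceptable for segmenting history in research but would introduce look-ahead if used for live decisions.

```python
def classify_regimes(vols, low_q=1 / 3, high_q=2 / 3):
    """Label each volatility reading 'low', 'medium', or 'high' using sample quantile cutoffs."""
    ranked = sorted(vols)
    last = len(ranked) - 1
    low_cut = ranked[round(low_q * last)]
    high_cut = ranked[round(high_q * last)]
    labels = []
    for v in vols:
        if v < low_cut:
            labels.append("low")
        elif v > high_cut:
            labels.append("high")
        else:
            labels.append("medium")
    return labels


# Hypothetical rolling volatility readings.
vols = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07]
labels = classify_regimes(vols)
```

A live variant would compute the cutoffs from a trailing window that ends before the bar being classified.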

High-Level Example of Operation

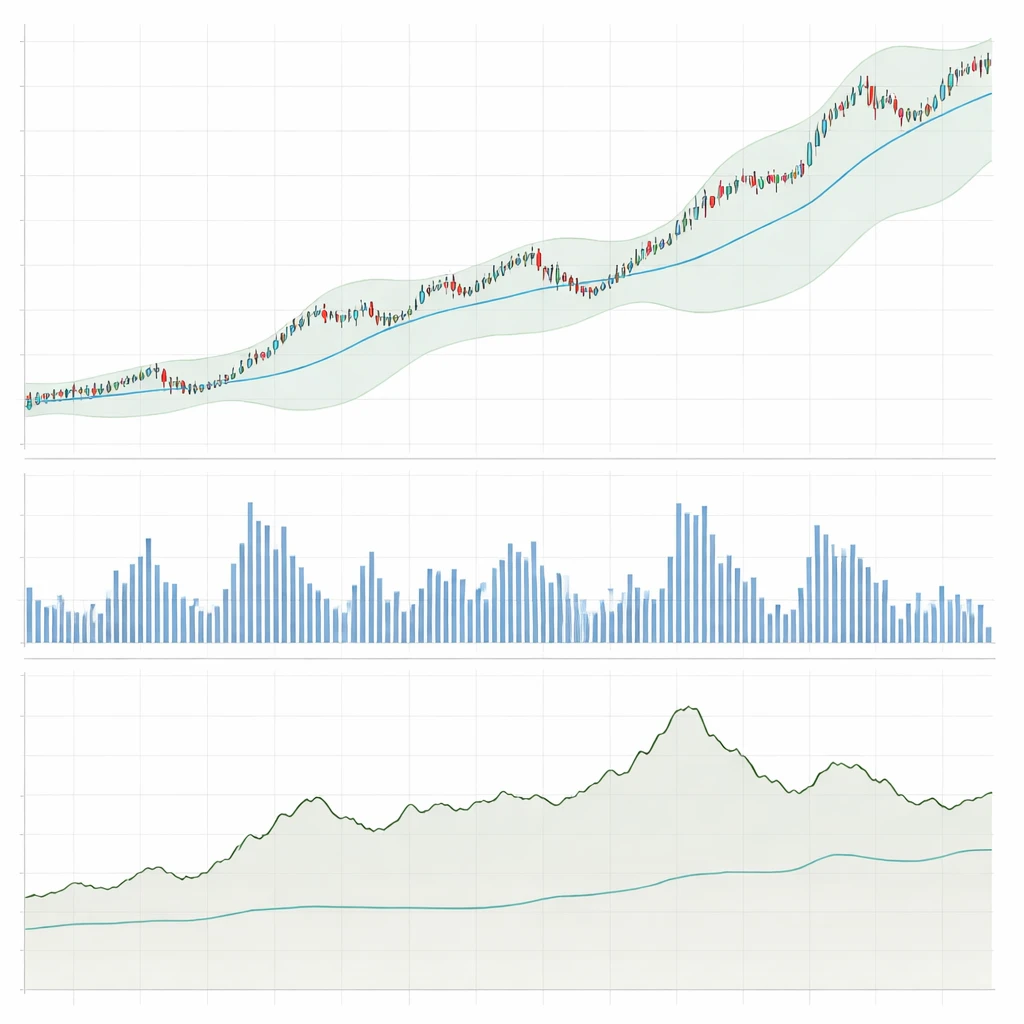

Consider a daily system applied to a diversified set of liquid instruments. The system uses a trend measure that identifies directional persistence over an intermediate horizon and a realized volatility measure derived from recent ranges. The design aims for consistent application across all instruments.

The workflow proceeds in stages:

- Data preparation. Clean and align daily bars with consistent handling of missing values, adjusted prices when necessary, and synchronized timestamps. Validate that highs, lows, and closes are internally consistent.

- Signal computation. For each instrument, compute a trend metric that summarizes whether recent price movement has been upward or downward. Compute a volatility estimate over a window designed to reflect the expected holding period.

- Risk-based sizing. Translate the combination of trend direction and volatility into a position size that targets a fixed risk contribution. Impose caps to prevent excessive leverage during quiet periods and floors to ensure that positions do not vanish completely during stress.

- Risk limits. Apply per-instrument and portfolio-level limits, including a total risk budget and maximum correlation-adjusted exposure. Use a volatility-informed protective exit that reduces exposure if adverse movement exceeds a typical fluctuation band.

- Execution. Place orders in a manner consistent with liquidity and cost constraints. Use a schedule that avoids thin periods when spreads widen significantly for the instrument.

- Monitoring and review. Track realized volatility versus the estimator, slippage relative to assumptions, and risk contributions by instrument. If actual volatility consistently exceeds estimates, consider whether the estimator needs an update or additional smoothing.

In quiet conditions, the system may hold relatively larger nominal positions because volatility scaling permits more exposure for the same risk budget. If a trend persists, gains accrue with lower day-to-day variability. If volatility increases sharply, the system automatically reduces position sizes and may also exit on protective rules if price movement breaches thresholds derived from typical ranges. During choppy intervals with no clear direction, the strategy expects small losses due to whipsaw, which are contained by the combination of volatility-adjusted sizing and exits.
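
The signal and sizing stages of this workflow can be condensed into a single function for one instrument. Everything specific here is an assumption for illustration: the price-versus-average trend rule, the window lengths, the risk budget expressed in price units, the unit limits, and the synthetic bars.

```python
from statistics import mean


def true_ranges(highs, lows, closes):
    """True range for each bar after the first, using the prior close."""
    return [max(highs[i] - lows[i],
                abs(highs[i] - closes[i - 1]),
                abs(lows[i] - closes[i - 1]))
            for i in range(1, len(closes))]


def target_position(highs, lows, closes, risk_budget,
                    trend_window=20, vol_window=10,
                    min_units=10, max_units=5000):
    """Signed target units on the latest bar: trend direction times volatility-scaled size."""
    if len(closes) < max(trend_window, vol_window + 1):
        raise ValueError("not enough history")
    gap = closes[-1] - mean(closes[-trend_window:])
    direction = (gap > 0) - (gap < 0)
    avg_range = mean(true_ranges(highs, lows, closes)[-vol_window:])
    if direction == 0 or avg_range <= 0:
        return 0
    units = risk_budget / avg_range              # larger typical range, smaller size
    units = max(min_units, min(max_units, units))  # floor and cap
    return direction * round(units)


# Synthetic bars: a steady uptrend and its mirror-image downtrend.
up = [100 + 0.5 * i for i in range(30)]
down = [130 - 0.5 * i for i in range(30)]

quiet_long = target_position([c + 1 for c in up], [c - 1 for c in up], up, 1000.0)
wild_long = target_position([c + 4 for c in up], [c - 4 for c in up], up, 1000.0)
quiet_short = target_position([c + 1 for c in down], [c - 1 for c in down], down, 1000.0)
```

With the same trend and risk budget, quadrupling the typical daily range cuts the position from 500 units to 125, which is the automatic size reduction described above.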

Portfolio Construction Across Markets

Applying the strategy across multiple instruments diversifies idiosyncratic risk. Volatility-aware sizing helps ensure that portfolio risk is not dominated by a single instrument purely because it is volatile. However, cross-market correlations often rise during stress, which can push aggregate portfolio volatility beyond the budget if not controlled. A portfolio module can adjust weights based on both individual volatilities and their correlations, targeting a total risk level rather than independent per-instrument targets. This shifts the focus from instrument-level sizing to portfolio risk control.
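
The effect of correlation on aggregate risk follows from the standard portfolio variance formula. The sketch below assumes two instruments with inverse-volatility weights, two invented correlation matrices, and a uniform rescaling to an assumed 10 percent target; a fuller portfolio module could adjust weights individually rather than uniformly.

```python
import math


def portfolio_vol(weights, vols, corr):
    """Portfolio volatility from weights, standalone volatilities, and a correlation matrix."""
    n = len(weights)
    variance = sum(weights[i] * weights[j] * vols[i] * vols[j] * corr[i][j]
                   for i in range(n) for j in range(n))
    return math.sqrt(variance)


def scale_to_target(weights, vols, corr, target_vol):
    """Rescale all weights by one factor so that portfolio volatility equals target_vol."""
    current = portfolio_vol(weights, vols, corr)
    return [w * target_vol / current for w in weights]


# Hypothetical inputs: inverse-volatility weights give each leg 0.10 standalone risk.
vols = [0.10, 0.20]
weights = [1.0, 0.5]
calm = [[1.0, 0.0], [0.0, 1.0]]
stressed = [[1.0, 0.8], [0.8, 1.0]]

vol_calm = portfolio_vol(weights, vols, calm)
vol_stressed = portfolio_vol(weights, vols, stressed)
rescaled = scale_to_target(weights, vols, stressed, target_vol=0.10)
```

Per-instrument risk is identical in both cases, yet portfolio volatility rises from about 0.14 to about 0.19 when correlation moves from 0 to 0.8. Instrument-level targets alone do not hold the portfolio to its budget.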

Sector or theme limits add an additional layer. For example, a system might limit total exposure to a group of closely related assets if their trends are driven by the same macro factor. These constraints are not forecasts. They are guardrails that keep the system within a defined risk envelope.

Data Integrity and Model Risk

Volatility estimation is sensitive to data issues, especially for instruments that experience corporate actions, contract rolls, or irregular trading hours. Adjusted price series that handle splits or dividends must be validated so that historical volatility is not artificially distorted. Futures rolls require continuous series construction with a transparent roll methodology. Differences in roll choices can change both trend and volatility profiles, which affects signals and risk sizing.

Model risk arises when historical patterns differ from future conditions. A model that relies on a specific window length or estimator might fail when that feature no longer aligns with market microstructure or typical participant behavior. Designs that work across a range of parameters and markets are less vulnerable to this risk.

Behavioral Considerations and Governance

Trend following demands patience through inevitable drawdowns. Volatility-aware sizing can reduce the depth of drawdowns but does not eliminate them. Clear documentation of rules, a defined process for handling exceptions, and pre-specified tolerance bands for performance deviations help maintain discipline. Post-trade analysis that records whether exits were triggered by trend reversals or volatility-based protections informs future improvements without ad hoc changes.

Governance also includes version control for research code, audit trails for parameter changes, and periodic reviews that compare live results with research expectations. These practices support repeatability and reduce the risk of introducing unvetted changes in response to short-term noise.

Variations on the Theme

There are many ways to integrate volatility with trend. Some systems make volatility a gate, trading only when it falls within a pre-specified band. Others modulate signal strength by volatility, for example by scaling the contribution of recent returns by their variability. Still others blend timeframes by using a slower trend component and a faster volatility component so that risk adjusts quickly even if the trend definition changes slowly. Each variation modifies the trade-off between responsiveness and stability.

Another variation involves asymmetric treatment of uptrends and downtrends. If down moves tend to have higher volatility, a system might allocate less nominal exposure when aligned with downward trends to maintain a consistent risk profile. This is not a directional forecast. It is a risk normalization based on observed differences in volatility across directions.

How It Fits a Structured, Repeatable System

A structured system is repeatable because it is explicit. Data inputs, calculations, thresholds, and decision rules are defined in advance. Volatility enters at multiple points in a documented pipeline. It informs position sizing, protective exits, and portfolio limits. Inputs are computed the same way each day. Parameter changes follow a controlled research process and are not made in response to isolated outcomes.

Such a system produces consistent decisions when presented with similar conditions. It is auditable because each decision can be traced to a rule and an input value. It is adaptable because volatility measurements allow the same rules to operate across assets with different price levels and dynamics. The emphasis shifts from guessing the next move to controlling exposure to evolving uncertainty.

Practical Testing Considerations

Testing a volatility-aware trend strategy benefits from multiple lenses:

- Cross-market testing. Evaluate behavior on instruments with different characteristics, such as equity indexes, government bonds, currencies, and commodities. Consistency across these sets is a sign of structural robustness.

- Regime segmentation. Partition history into low, medium, and high volatility regimes and analyze performance separately. Look for deterioration that suggests an overreliance on a specific environment.

- Parameter sweeps. Test ranges for trend and volatility windows to assess sensitivity. A design that functions across a band of choices is less fragile.

- Cost stress. Increase assumed transaction costs to levels observed during stressed markets. Robust designs degrade gracefully.

- Execution realism. Include slippage consistent with instrument liquidity and trade frequency, and model overnight gaps if positions are held over the close.
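
The parameter-sweep item above can be illustrated with a toy harness. Everything here is an assumption: `toy_backtest` is a deliberately simplified long/short rule with no costs, the prices are a seeded synthetic random walk, and the window grids and exposure cap are arbitrary. The output says nothing about real performance; the point is only the shape of the procedure.

```python
import random
from statistics import mean, pstdev


def toy_backtest(prices, trend_window, vol_window, target_vol=0.01, max_exposure=3.0):
    """Sum of daily returns from a moving-average rule with volatility-scaled exposure."""
    rets = [b / a - 1.0 for a, b in zip(prices, prices[1:])]
    start = max(trend_window, vol_window + 1)
    total = 0.0
    for t in range(start, len(prices) - 1):
        # Decide at the close of bar t using only data up to and including bar t.
        average = mean(prices[t - trend_window + 1:t + 1])
        direction = 1 if prices[t] > average else -1
        vol = pstdev(rets[t - vol_window:t])
        exposure = min(target_vol / vol, max_exposure) if vol > 0 else 0.0
        # rets[t] is the return from bar t to bar t + 1, earned after the decision.
        total += direction * exposure * rets[t]
    return total


def synthetic_prices(n=500, seed=42):
    """Seeded random-walk prices, used only to make the example reproducible."""
    rng = random.Random(seed)
    prices = [100.0]
    for _ in range(n - 1):
        prices.append(prices[-1] * (1.0 + rng.gauss(0.0003, 0.01)))
    return prices


prices = synthetic_prices()
results = {(tw, vw): toy_backtest(prices, tw, vw)
           for tw in (20, 50, 100)
           for vw in (10, 20)}
spread = max(results.values()) - min(results.values())
```

A wide spread between the best and worst cells of the grid, or a single isolated peak, would be a warning sign of the fragility described above.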

Limitations and What Volatility Cannot Solve

Volatility management does not create trends. It cannot convert a mean-reverting market into a trending one. It also cannot predict future volatility with precision. Estimators reflect past or recent conditions and will be surprised by shocks. Overreliance on a specific volatility filter can cause the system to sit out profitable periods or trade aggressively in unfavorable ones. Caps on volatility-scaled positions can also result in under-exposure during sharp, well-behaved trends that occur with low volatility, which reduces participation.

These limitations argue for clarity in objectives and humility in design. Volatility is a tool for risk normalization and for aligning the system’s exposure with current uncertainty, not a guarantee of superior performance.

Key Takeaways

- Trend following seeks to align with directional persistence, while volatility measures the variability of returns that shapes risk and execution.

- Integrating volatility enables risk-based sizing, regime filters, and exits that adapt to changing market conditions within a consistent rule set.

- Volatility scaling targets comparable risk contributions across instruments, reducing uneven exposure through time without eliminating drawdowns.

- Robust systems prioritize clear measurement, stable parameters, realistic cost modeling, and portfolio-level risk controls that account for changing correlations.

- Volatility management is a risk normalization tool rather than a predictive edge, and its effectiveness depends on disciplined, repeatable implementation.